Wednesday, December 09, 2009

DB Server Latency can kill data intensive processing systems…

Sounds obvious, yet many forget this…

Take a recent example: a prototype spike of functionality was created that, for a certain reason, had to read a lot of data from many sources and then write aggregated results a row (and sometimes a column) at a time. There could be millions of pieces of data being written in real time.

The issue here was how to increase performance, especially when doing many thousands of writes to a single database server at a time. In fact, we hit 400 ms latency, with SQL Server running out of ports and struggling to process requests and connections.

Of course we got a performance increase by running multiple threads of updates at once, but we were still at the mercy of the latency of each single update/command.

Another way around this type of processing was to do all the work as batch/set-based updates, but that makes auditing the data harder.
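To put rough numbers on the latency problem, here is a back-of-the-envelope model in C#. It assumes, purely for illustration, that every round trip to the database costs the same fixed latency (400 ms, as above), that the server-side work per row is negligible, and that threads overlap round trips perfectly; real systems will do worse on all three counts. `EstimateSeconds` is a hypothetical helper, not part of any library.

```csharp
using System;

// Rough model (an assumption, not a measurement): each round trip costs a fixed
// latency, server-side work per row is ignored, and threads overlap perfectly.
double EstimateSeconds(long rows, double latencyMs, int rowsPerRoundTrip, int threads)
{
    long roundTrips = (rows + rowsPerRoundTrip - 1) / rowsPerRoundTrip; // ceiling division
    double serialMs = roundTrips * latencyMs;
    return serialMs / threads / 1000.0;
}

// 10,000 single-row writes at 400 ms each over one connection.
Console.WriteLine(EstimateSeconds(10_000, 400, 1, 1));    // 4000
// The same writes spread over 8 threads.
Console.WriteLine(EstimateSeconds(10_000, 400, 1, 8));    // 500
// One thread, but 1,000 rows per set-based batch.
Console.WriteLine(EstimateSeconds(10_000, 400, 1000, 1)); // 4
```

Threads only divide the cost of each round trip, while batching removes round trips altogether, which is why set-based updates are so tempting despite the auditing issues.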

The best approach so far was to create functions inside SQL Server in native .NET (SQL CLR), but that tied us to the platform and was not as useful as expected.

The above scenario, and the many other times I have run into it, is one of the reasons I am looking at cloud/parallel data systems and also BigTable-style or NoSQL data management systems, both in memory and on disk.

The landscape is getting interesting. As always, in most cases the performance we got with SQL is more than enough; however, I wanted to see just how much more performance we can get, especially when looking at very large data integration/merge/match projects, or data management and data updates from hundreds, thousands or even hundreds of thousands of potential systems or users at once.

Infrastructure Scaling-up Vs. Scaling-Out.

It is quite common in web applications or distributed hosted systems to run into the question of scaling your platform, either to support more clients or to gain processing speed/power.

There are 2 approaches to upgrading your hardware.

- Scaling-up - the process where a single ‘Iron Server’ is upgraded to its maximum capacity. This means you upgrade a server to support the maximum CPU/RAM allowed. On some bare-bones ‘Iron Servers’ these statistics can be quite impressive; for example, an HP ProLiant DL785 G5 can be upgraded to 8 CPU sockets, 64 memory sockets, 16 drive bays, 11 potential expansion slots and 6 power supplies.

- Scaling-out – This is where a moderately powered server setup is augmented by adding more equivalently powered servers, ideally with exactly the same architecture or specification, although technology advances make this hard over time.

With the advent of cloud computing hitting a more mainstream market and showing some proven viability, it is quite natural to look at scaling out a system to take advantage of this newer parallel processing paradigm.

Now, there are issues you need to be aware of in both scenarios, so let’s start with the obvious ones; for now they are all mainly TCO/ROI based points. Later I will do some posts on the development and systems impact of these ways of achieving physical scalability. (I am listing negatives only.)

Scaling-UP

- Cost can be quite prohibitive for a single server, even a monster like a fully loaded HP ProLiant. (I have seen some priced at over 125K GBP (200K+ USD).)

- Potentially a single point of failure

- Not as optimized for parallel processing as an array/grid of servers, and does not scale the same way

- Physical limit on scaling (CPU/RAM/storage), although storage can be made external, so that part is not really an issue.

Scaling-Out

- Rack Space/Server space costs more

- Power consumption can be higher for same amount of processor/memory when compared to a scaled-up iron server

- OS and Database Licensing costs can be a lot more expensive, however this is changing with cloud database and application servers.

- Infrastructure to support and manage the servers can cost a lot in both time and money.

Final notes: if the real issue is cost, then although scaling up seems more expensive, do not underestimate the sheer resource cost of supporting many servers, housing them, network infrastructure and so on. The only time scaling out seems to make a real case on cost is when you reach a critical mass in scaling to meet client needs, and even then you can cloud/cluster a collection of “iron servers”.

Personally, it depends on the situation you are faced with. If cost is an issue but you have good network infrastructure, clouding with an array of servers is a very viable option. But once you start to scale, it may be worthwhile just getting an iron server or two and scaling them up in the same network. Scaling out is really useful when you have a lot of old servers/PCs already inside your infrastructure that can be utilized, even if just a little, by a cloud or grid based solution.

I have purposely kept away from the area of server virtualization and provisioning, as it can change things drastically. This is also why I have avoided the issue of OS choice.

High performance, high user websites.

While looking at performance in eCommerce and large social sites on the web I came across a couple of surprising profiling reports. The most impressive to me so far is that of Plenty of Fish, a very popular free online dating site that has been valued at nearly 1 billion USD. That in itself is not that surprising in the big stakes of online systems. What is surprising is the number of hits and users their site gets and, more importantly, just how little hardware and how simple an architecture their system uses. It’s truly beautiful in this day and age of bloat and expensive server arrays and grids.

Here’s some basic stats:

Plenty of Fish gets approximately 1.2 billion page views a month, with an average of 500,000 unique logins per day. That's not bad at all.

The system deals out 30+ million hits a day, around 500-600 hits a second. Now that is very impressive!

The technology used is ASP.NET at its core. The server setup can apparently deal with 2 million page views an hour under stress.
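A quick sanity check on those figures is to convert each one to an average per-second rate (assuming a 30-day month). The daily and monthly averages come out below the 500-600 hits a second quoted above, which is much closer to the quoted peak of 2 million page views an hour, so that figure probably describes peak rather than average load. `PerSecond` is just an illustrative helper.

```csharp
using System;

// Convert a count over a period into an average per-second rate.
double PerSecond(double count, double periodSeconds) => count / periodSeconds;

const double Day = 24 * 60 * 60;   // 86,400 seconds
const double Hour = 60 * 60;       // 3,600 seconds
const double Month = 30 * Day;     // assumes a 30-day month

Console.WriteLine($"{PerSecond(30_000_000, Day):F0} hits/s averaged over a day");            // 347
Console.WriteLine($"{PerSecond(1_200_000_000, Month):F0} page views/s averaged over a month"); // 463
Console.WriteLine($"{PerSecond(2_000_000, Hour):F0} page views/s at the quoted peak");        // 556
```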

Now the hardware!!! and this is quite impressive:

2 load-balanced web servers (to get past the IIS restriction of 64,000 simultaneous connections).

3 database servers: a main account server and 2 others used for searching and, at a guess, data profiling. (I do suspect these are seriously loaded-up ‘Iron Servers’, which in some cases can easily cost anywhere between 50-100K GBP.)

Despite all of this, it’s pretty impressive that a site can reach that level of performance using so little hardware.

Over the next week or two I will explore similar architectures, and also the additional application of cloud/parallel processing across a service bus, to see if anything can get close to those stats (although I don’t have that hardware, some things can be approximated). I do think a virtual cloud running on a suitable batch of 5 decent machines should be able to deal with 200-500K page serves an hour for a moderately complex, media rich (images, not video), dynamically generated system. I will also be looking at the issue of transactions over boundaries, as this is where I see the biggest hit on performance (for example, database clusters/clouds accessed by cloud or service applications such as .NET applications, or Java clients if REST is used).

I will also look at the issue of scaling out Vs Scaling up the physical architecture.

Tuesday, December 08, 2009

DSL memory management and parameter issues…

Ok now, I am doing a little work on the DSL for this blog. I am currently working on the Runtime stack and Activation record support for interpreter memory management when interpreting and executing DSL based code/logic.

Before I go into specifics, I will outline some key concepts/terminology that will make this a lot easier to explain.

- Memory Maps – A memory map contains a collection of memory cells. A memory map is pretty much a key part of an activation record. For now assume a memory map is a collection of variable/parameter data in an activation record for a given function or scope of processing.

- Memory Cells – A memory cell is where the actual data is stored, and is part of a memory map. This is where the actual details of a variable/parameter, and its current value, are stored.

- Activation Records – An activation record exists for each level of scope in the interpreter as it runs code, so there is an activation record for each level of an interpreted function call. Even if a recursive function is called, there will be a separate activation record for each call. You can also access any activation record further up the call chain (that is, lower down the runtime stack) to reach scope-wide variables in a chained function call.

Ok now some nuts and bolts, consider the following piece of pseudo code:

```
START OF PROGRAM
    LOCAL VARIABLE StringX EQUALS "String X"
    LOCAL VARIABLE StringY EQUALS 2010
    PRINT RESULT OF
        FUNCTION COMBINE USING StringX AND StringY
END OF PROGRAM

DEFINE FUNCTION COMBINE (X, Y)
    RETURN X + Y
END OF FUNCTION
```

The picture below shows a diagram of a memory map and the cells inside of it.

As you can see, the top level of this memory map shows that 2 variables, StringX and StringY, were created. Not only that: when the function is called, the values of StringX and StringY are copied into the variables X and Y in memory map 2 as they are passed as parameters to the COMBINE function. This is an example of pass-by-value parameter passing.

OK, now that is covered, look at the diagram that illustrates an example runtime stack with activation records as it goes through the previous pseudo code.

From this example you can see the transient states of the runtime stack as it runs through the code.

First we just have variables defined in the main program under AR1 (activation record 1). When the function COMBINE is called, AR1 creates an entry for the result variable (the result of a function call in this pseudo language), and then processing passes to the function COMBINE, which itself creates a couple of entries in a new activation record, AR2 (which, as you can see, is pushed onto the activation record stack in the runtime memory system).

Once processing in the function COMBINE is complete, control is handed back to the calling MAIN program and the activation record AR2 is popped from the stack.

A key thing to remember here is that every activation record can see any data in the lower level activation records in the runtime stack. This becomes very important later, when I will explain how to define varying levels of scope for a variable in a DSL.
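Here is a minimal sketch of the runtime stack described above, in C#. It is a simplification for illustration, not the interpreter's actual code: each memory map is a `Dictionary<string, object>` whose entries play the role of memory cells, each activation record is just one of those maps, and `CallCombine` hard-codes the COMBINE function from the pseudo code. All function names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// The runtime stack: one activation record per active scope/function call.
// Each activation record is modelled as a memory map (name -> memory cell value).
var runtimeStack = new Stack<Dictionary<string, object>>();

void PushActivationRecord() => runtimeStack.Push(new Dictionary<string, object>());
void PopActivationRecord() => runtimeStack.Pop();

// Create (or overwrite) a memory cell in the current, top-most activation record.
void Define(string name, object value) => runtimeStack.Peek()[name] = value;

// Resolve a name starting at the top record and walking down towards AR1,
// so code running in a called function can still see data in lower records.
object Lookup(string name)
{
    foreach (var record in runtimeStack) // Stack<T> enumerates from top to bottom
        if (record.TryGetValue(name, out var value))
            return value;
    throw new KeyNotFoundException($"Undefined variable '{name}'");
}

// COMBINE(X, Y): push AR2, copy the arguments in (pass by value), compute, pop AR2.
object CallCombine(object x, object y)
{
    PushActivationRecord();                          // AR2
    Define("X", x);
    Define("Y", y);
    object result = string.Concat(Lookup("X"), Lookup("Y"));
    PopActivationRecord();                           // AR2 discarded on return
    return result;
}

// MAIN program
PushActivationRecord();                              // AR1
Define("StringX", "String X");
Define("StringY", 2010);
Define("RESULT", CallCombine(Lookup("StringX"), Lookup("StringY")));
Console.WriteLine(Lookup("RESULT"));                 // String X2010
Console.WriteLine(runtimeStack.Count);               // 1
```

`Lookup` walks from the top of the stack downwards, which is what lets a function see variables in the records below it, while `Define` only ever writes to the top record, so assigning to `X` inside COMBINE cannot change `StringX` in AR1.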

Basic High level design of a text based matrix parser…

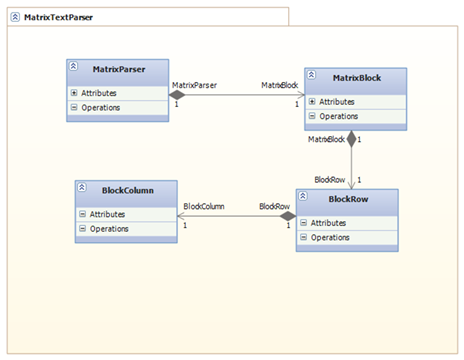

In this post I will introduce the basic architecture for a matrix based text file/stream parser that can deal with file formats in both fixed width and delimited notation. Not only that, the matrix parser can deal with one-to-one (1-2-1), one-to-many (1-2-M) and many-to-one (M-2-1) relationships in the text stream. It can also deal with mixed format files where you have column based entries, CSV formats and also multiple ragged formats of fixed width data.

The concept is in reality quite simple and, if you think about it after looking at the architecture diagram, is quite similar to the structure used when creating complex reports in tools like Crystal Reports.

The structure is quite simple, here is a text breakdown of the key parts of a matrix parser.

- Scanner
- Token Extractor
- Matrix structure, made up of
  - Blocks of rows that can be CSV, fixed or column meta-type, made up of
    - Rows, which can contain tokens or are made up of
      - Columns, which can contain
        - another Block, or the data to parse

Below is a high level class diagram for a simplistic matrix based text parsing library/API. (The scanning/parsing/tokenizing part is left out.)

I will put up a full architecture later, when I walk through how the scanner/parser and token extractor works.
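The Block, Row and Column part of the structure above can be sketched in a few lines of C#. This is an assumption-laden simplification rather than the library itself: a block is reduced to a run of lines plus a row parser, only fixed-width and delimited rows are handled, delimited parsing ignores quoted fields, and nested blocks inside columns are left out. The function names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Split a fixed-width row into columns using a list of column widths.
// Short (ragged) rows yield empty strings for missing columns instead of throwing.
string[] ParseFixedWidthRow(string line, int[] widths)
{
    var columns = new string[widths.Length];
    int start = 0;
    for (int i = 0; i < widths.Length; i++)
    {
        if (start >= line.Length)
            columns[i] = "";
        else
        {
            int length = Math.Min(widths[i], line.Length - start);
            columns[i] = line.Substring(start, length).Trim();
        }
        start += widths[i];
    }
    return columns;
}

// Split a delimited row (no quoted-field handling in this sketch).
string[] ParseDelimitedRow(string line, char delimiter) =>
    line.Split(delimiter).Select(c => c.Trim()).ToArray();

// A block here is just a format rule (row parser) applied to each line in a run of lines.
List<string[]> ParseBlock(IEnumerable<string> lines, Func<string, string[]> rowParser) =>
    lines.Select(rowParser).ToList();

// A mixed-format stream: a fixed-width header block followed by a CSV detail block.
var header = ParseBlock(new[] { "HDR20091208ACME      " }, l => ParseFixedWidthRow(l, new[] { 3, 8, 10 }));
var detail = ParseBlock(new[] { "1,Widget,9.99", "2,Gadget,19.50" }, l => ParseDelimitedRow(l, ','));
Console.WriteLine(string.Join("|", header[0])); // HDR|20091208|ACME
Console.WriteLine(detail.Count);                // 2
```

Choosing the row parser per block, rather than per file, is what allows one stream to mix fixed-width and delimited sections.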

DSL and logic/rule systems…

Ok another post on logic/rule systems and their implementation through a DSL (Domain Specific Language).

Most rule engines use very simplistic logic for making choices based upon conditions; these systems can easily be created as a DSL with basic IF/THEN/ELSE constructs followed by functional processing.

These logic systems I refer to as simple procedural logic based systems.

Another area of definition in DSL/logic/rule engines is whether the engine itself is to do any processing, or simply returns the result of a condition as a logical Boolean value or a piece of data. (This would be a very simple DSL: a very limited grammar and little else except an execution based interpreter.)

There are of course many pros and cons to both ways of doing things, and then there is the hybrid where both techniques are implemented. (This is a simple interpreter-focused DSL, with a limited grammar, context management and a symbol table.)

The next level of complexity in a logic system is where you extend the basic syntax to take into account far more complex processing and logical conditions, or indeed logic chaining. In essence, a lot of these logic/rule engines actually have a full scripting language focused on the domain of the logic being looked at.

This is obviously a very clear case for a fully realized DSL with full interpretation support, and if performance is a real issue the DSL should also compile to the platform it is running on (in .NET that would be to MSIL for the CLR; in the Java world, to JVM bytecode).
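On .NET, one low-effort way to get compiled rules without writing an IL emitter is to have the DSL front end build a `System.Linq.Expressions` tree and call `Compile()`, which on the standard runtime produces a delegate backed by generated IL. The sketch below builds the tree by hand for a hypothetical threshold rule; in a real DSL the parser would construct it from the rule script. `CompileThresholdRule` and the REVIEW/ACCEPT values are illustrative assumptions.

```csharp
using System;
using System.Linq.Expressions;

// Hypothetical rule: IF amount > limit THEN "REVIEW" ELSE "ACCEPT".
// A DSL front end would build this tree from parsed script; here it is built by hand.
Func<decimal, string> CompileThresholdRule(decimal limit)
{
    ParameterExpression amount = Expression.Parameter(typeof(decimal), "amount");
    Expression condition = Expression.GreaterThan(amount, Expression.Constant(limit));
    Expression body = Expression.Condition(
        condition,
        Expression.Constant("REVIEW"),
        Expression.Constant("ACCEPT"));
    // Compile() turns the expression tree into a delegate, so the rule runs like normal code.
    return Expression.Lambda<Func<decimal, string>>(body, amount).Compile();
}

var rule = CompileThresholdRule(1000m);
Console.WriteLine(rule(250m));  // ACCEPT
Console.WriteLine(rule(5000m)); // REVIEW
```

The trade-off is that compilation has a one-off cost per rule, so it pays off when a rule is evaluated many times, as with large data feeds.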

Finally, besides the pure language syntax approach, there are also 2 ways to implement a DSL. In order of my personal preference, these are:

- Template focused against DSL – this is where you have a logic template and you map values into the template prior to processing. Since you are not running all script through a DSL unless you are working with expressions, there is a significant speed increase; if architected well, you can outperform DSL interpretation. The reason I prefer this format is that you can also mix in DSL based script at any point, and thus provide incredible flexibility. You can also implement focused parsing techniques to only interpret/error check/process logic for a specific structure of code for a domain, which also allows a lot of very specific processing to be done within a known sandbox and/or context of a system.

- Template boxed no DSL – otherwise known as lazy DSL, this way of doing things simply means you have an execution layer that has execution profiles like an interpreter but can only do simple logic based on a template. These systems start off very well; however, once users start to create their own logic/rules, in most cases they will demand more and more control and processing flexibility, which necessitates basic DSL and expression parsing and execution.

An example of a boxed template is `IF <condition> THEN <process true result> ELSE <process false result>`.

To execute the above you would just need a simple function:

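```csharp
using System; // for Func<bool> and Action

// Boxed IF/THEN/ELSE template: evaluate the condition, run the matching process,
// and return the logical result of the condition (true/false).
// conditionExpr stands in for ExecuteExpression, the two Actions for ExecuteProcess.
bool ExecuteBoxedIfThenElse(Func<bool> conditionExpr, Action trueProcess, Action falseProcess)
{
    if (conditionExpr())
    {
        trueProcess();
        return true;
    }

    falseProcess();
    return false;
}
```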

Pretty simple. And if the condition evaluation only ever returns a true or false value, you can see how easy it is to nest this logic and evaluate it.

But the key thing to think about is whether you need to do advanced processing using variables, memory management, context and chaining of rules. It is also very hard to debug this format of rule at runtime inside the system that implemented it: since there is no scanning and interpreting in the traditional manner, syntax checking and lookup in a rule builder UI is a challenge, to say the least. However, for simple rules and logic it is very fast to implement and easy to understand conceptually, whereas writing a DSL is a far more complex task.

Friday, December 04, 2009

Thick Vs. Thin Client Vs Rich Client.

Yet another argument I hear all of the time; crazy really, as all clients have their place. However, before I put my completely biased view forward, let’s dispel some far too common myths.

For now this post will focus purely on non-mobile UIs (user interfaces) and UX (user experience).

Thick Client Myths

- Better UI – well, yes and no; it really depends on the tools you use and the skills of your team. I have seen some truly atrocious user interfaces in my career, and the majority of them have been Windows/Linux thick client based.

- Best User experience – pretty much the same as above, though thick clients do excel in UI performance, provided the workstation they are installed on is powerful enough.

- Weak in distributed computing – I love this one. It does not come up often, but it seems that all of the PR that web services got in the early 2000s actually got people thinking you can only really use distributed data in rich (smart) or web based client UIs.

- Hard to deploy around a company – My personal favorite. For a long time now there has been a big shift in deployment options across an enterprise (or SMB), where you can have applications auto-install across a network based on user privileges. Not only that, applications can also be self-updating once installed. This gets around the deployment issue normally associated with thick clients. Of course a web browser application is immediately available; however, you must also realize that a web application has a lot of restrictions and some pretty big security and deployment issues of its own.

Web Client Myths

- Easy to Deploy – This depends entirely upon your organization and the technologies used on a website. A very good example of this is Flash and Java enabled applications, which need a browser’s security settings to be relaxed so these technologies can run effectively.

- Bad UI features – Where to start? Some of the best UIs I have seen have been web based. However, as technology and user expectations grow, I see more and more web based applications trying to do very complex processing and logical displays in a web front end that really push the web to its limits and ultimately fail to deliver a good user experience. Examples of these are screen designers/workflow layout designers. Most of these web applications resort to very large Java code/applications being installed locally, or large JavaScript/VBScript files that get processed on every page load and refresh, which means postbacks or async callbacks are slow at best. There is also the unwritten rule in web UI design that ideally you never go beyond 50-75 UI controls on a single page.

- Good User Experience – This really is a graphic and UX/UI design area. If you have a great design team that understands the issues of the backend providing data, and also the REAL practical limitations of a web application, the UX should be good. However, most web applications (not websites) are pretty weak user experiences outside of the pure media and gaming industries.

- Cheap to Implement – This is the myth that is the biggest pain in my ass. I have never been on a web application project that has been cheaper to implement than a thick client, mobile or rich client application. Most of the time is usually spent trying to get the UI to work well in more than one browser and to maintain state in a complex page. I have seen systems with workflow designers take months to reach an acceptable web experience compared to a thick client, again mostly due to state and application control issues. For simple UI/UX the web is amazingly fast to prototype and get running; for commercial quality user experience and UI flexibility, the web is not the way to go.

Rich Client Myths

- Security – The number one reason for not using a RIA is security: an application that can be downloaded from the web, uses 80% web technology, yet runs like a standalone thick client on a desktop or in a web browser concerns people. This also leads on to the next myth.

- Deployment – The deployment issue is tied more to security and corporate/company standards for staff workstations than it is to actual data security issues. The issue here is that a lot of companies do not allow staff to download and install browser plug-ins; quite a few even disable Flash players. All RIA technologies currently need a client installed in a browser or on the operating system in use. Example RIA frameworks are

So when would I use each of these types of UI? Well, that will be in a later post; my lunchtime just ran out…

Implementing the Levenshtein distance algorithm

In a previous post I mentioned there are many ways to perform a test on a string to see how closely it can match another string. One of the more interesting algorithms for this is the Levenshtein Distance Algorithm.

This algorithm is named after Vladimir Levenshtein, who described it in 1965. It is often used in applications that need to determine how similar, or indeed how different, two string values are.

As you can guess, this is very useful if you ever want to implement a basic fuzzy matching algorithm, for example. It is also used a lot in spell checking applications to suggest potential word matches.

The code sample below is in C# but can be translated to any language. It is not optimized at all and is only here to serve as an illustration (there are a lot of ways to refactor and optimize the following code). It should be noted that there are better and more sophisticated distance algorithms that you can find via Google or a good book on string comparison algorithms.

```csharp
using System;

public class LevenshteinDistance
{
    // Returns the minimum number of single-character insertions, deletions
    // or substitutions needed to turn valueOne into valueTwo.
    public int Execute(String valueOne, String valueTwo)
    {
        int valueOneLength = valueOne.Length;
        int valueTwoLength = valueTwo.Length;

        // One extra row and column for the empty-prefix case.
        int[,] DistanceMatrix = new int[valueOneLength + 1, valueTwoLength + 1];

        for (int i = 0; i <= valueOneLength; i++)
            DistanceMatrix[i, 0] = i; // i deletions
        for (int j = 0; j <= valueTwoLength; j++)
            DistanceMatrix[0, j] = j; // j insertions

        for (int j = 1; j <= valueTwoLength; j++)
            for (int i = 1; i <= valueOneLength; i++)
            {
                if (valueOne[i - 1] == valueTwo[j - 1])
                    DistanceMatrix[i, j] = DistanceMatrix[i - 1, j - 1];
                else
                {
                    int a = DistanceMatrix[i - 1, j] + 1;     // deletion
                    int b = DistanceMatrix[i, j - 1] + 1;     // insertion
                    int c = DistanceMatrix[i - 1, j - 1] + 1; // substitution
                    DistanceMatrix[i, j] = Math.Min(Math.Min(a, b), c);
                }
            }

        return DistanceMatrix[valueOneLength, valueTwoLength];
    }
}
```

And the test:

```csharp
using Xunit;

public class LevenshteinDistanceTests
{
    [Fact]
    public void TestDistanceAlgorithm()
    {
        LevenshteinDistance x = new LevenshteinDistance();
        Assert.Equal(3, x.Execute("Sunday", "Saturday"));
    }
}
```
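As noted above, the matrix version is not optimized. One well-known refactoring keeps only two rows of the matrix at a time, since each cell depends only on the current and previous rows; the result is the same, but memory drops from O(n*m) to O(m). This is a sketch written as a standalone local function (`LevenshteinTwoRow` is a hypothetical name), not a change to the `LevenshteinDistance` class.

```csharp
using System;

// Same distance as the full-matrix version, but only the previous and current
// rows are kept, so memory is proportional to the length of the second string.
int LevenshteinTwoRow(string a, string b)
{
    var previous = new int[b.Length + 1];
    var current = new int[b.Length + 1];
    for (int j = 0; j <= b.Length; j++) previous[j] = j; // "" -> first j chars of b

    for (int i = 1; i <= a.Length; i++)
    {
        current[0] = i; // first i chars of a -> ""
        for (int j = 1; j <= b.Length; j++)
        {
            int cost = a[i - 1] == b[j - 1] ? 0 : 1;
            current[j] = Math.Min(Math.Min(previous[j] + 1,   // deletion
                                           current[j - 1] + 1), // insertion
                                  previous[j - 1] + cost);      // substitution or match
        }
        (previous, current) = (current, previous); // the row just built becomes "previous"
    }
    return previous[b.Length];
}

Console.WriteLine(LevenshteinTwoRow("Sunday", "Saturday")); // 3
Console.WriteLine(LevenshteinTwoRow("kitten", "sitting"));  // 3
```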

Tomorrow, I will post a blog entry explaining how you can use this algorithm to match exact or near exact data.

Thursday, December 03, 2009

TDD for the DSL Parser…

Here I will document the TDD aspects of the parser diagrammed in a previous post.

I will also use this as a brief guide on how to do TDD in a real application environment.

I will be using xUnit as the testing framework, and Gallio as the test runner. (also for sake of simplicity I am using C# since for some reason F# xUnit tests are not showing up properly in Gallio at the moment.)

The empty test C# file is as follows:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using Xunit;

// Blog DSL Parser unit tests
namespace blogParserTests
{
    public class ParserTests : IDisposable
    {
        // xUnit creates a new instance per test: set up in the constructor,
        // tear down in Dispose (xUnit does not use finalizers for teardown).
        public ParserTests()
        { }

        public void Dispose()
        { }

        // Tests Start here

        // Test batch for Token Class

        // Test batch for ScanBuffer Class

        // Test batch for Scanner Class

        // Test batch for Parser Class
    }
}
```

I am ordering the tests in bottom call stack order, so that all low level classes are tested prior to higher level ones. This is merely my preference for when I look at test results in Gallio or the GUI of xUnit.

Next up, it’s time to put in a few tests. I normally only put in essential tests for the results of method calls and events when writing unit tests. The only time I test other things is if I need to test the performance of some code, or if I have some business logic/formulas that need to be confirmed as working as defined by a client.

Client driven unit tests are normally written when I have a story to implement that has no UI of its own but does have a function behind a UI, or some important back-end processing hidden from the user. This is one of the cleanest ways to get the processing accepted for a story in a Scrum sprint.

OK back to the tests.

When I write tests I always do it in a simple format. An example test is shown below.

```csharp
[Fact]
public void CreateSimpleToken()
{
    // Orchestrate Block

    // Activity Block

    // Assertion Block

    // Make test fail to start with
    Assert.True(false);
}
```

When I code a single unit test I split it into 3 blocks of code or steps.

- Orchestrate – This is where I set up any variables/objects/data needed to perform the specific testing I am doing.

- Activity – This is where I do something that returns data or modifies Orchestrated data as part of the test

- Assertion – This is where I test assertions against data returned or modified in the activity block of the test.

Also when I first create a test, I set it to always fail by default as you can see in the example.

In xUnit you define a test by using the [Fact] attribute.

In Gallio the test looks and fails as shown in the screen shot below.
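To show the three blocks filled in, here is a version of the `CreateSimpleToken` test once it has been made to pass. So that it runs without xUnit or Gallio, the checks throw exceptions instead of calling `Assert`; in the real test class the body would sit inside the `[Fact]` method with `Assert.Equal`. `CreateToken` and its (Kind, Text) tuple are hypothetical stand-ins for the Token class, whose real design is not shown in this post.

```csharp
using System;

// Hypothetical stand-in for the Token class: a token is just a (Kind, Text) pair here.
(string Kind, string Text) CreateToken(string text) =>
    text is "PRINT" or "RETURN" or "LOCAL"
        ? ("Keyword", text)
        : ("Identifier", text);

void CreateSimpleToken()
{
    // Orchestrate Block: set up the input the test needs
    string source = "PRINT";

    // Activity Block: call the code under test
    var token = CreateToken(source);

    // Assertion Block: check the result (Assert.Equal in the xUnit version)
    if (token.Kind != "Keyword") throw new Exception($"Expected Keyword, got {token.Kind}");
    if (token.Text != "PRINT") throw new Exception($"Expected PRINT, got {token.Text}");
}

CreateSimpleToken();
Console.WriteLine("CreateSimpleToken passed");
```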

DSL – Simple Parsing and Scanning Architecture…

In this blog entry I will revisit the DSL that I mentioned in earlier blogs. This time, however, I will start to document parts of a simplistic interpreter that could be used as the basis of a DSL.

The DSL syntax will be pretty simplistic to start with. But before I get ahead of myself, I should show what the basic high-level architecture will look like.
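As a rough illustration of what the scanning stage of such an interpreter does, here is a minimal hand-written scanner in C# that turns source text into (Kind, Text) tokens. The token kinds and rules are assumptions for illustration only, not the grammar of this DSL, and there is no error reporting or line/column tracking.

```csharp
using System;
using System.Collections.Generic;

// Minimal scanner: walks the source once and emits (Kind, Text) tokens.
List<(string Kind, string Text)> Scan(string source)
{
    var tokens = new List<(string Kind, string Text)>();
    int pos = 0;
    while (pos < source.Length)
    {
        char c = source[pos];
        if (char.IsWhiteSpace(c)) { pos++; continue; }
        int start = pos;
        if (char.IsLetter(c))
        {
            while (pos < source.Length && char.IsLetterOrDigit(source[pos])) pos++;
            tokens.Add(("Identifier", source.Substring(start, pos - start)));
        }
        else if (char.IsDigit(c))
        {
            while (pos < source.Length && char.IsDigit(source[pos])) pos++;
            tokens.Add(("Number", source.Substring(start, pos - start)));
        }
        else if (c == '"')
        {
            pos++; // skip the opening quote
            while (pos < source.Length && source[pos] != '"') pos++;
            tokens.Add(("String", source.Substring(start + 1, pos - start - 1)));
            pos++; // skip the closing quote (if present)
        }
        else
        {
            tokens.Add(("Symbol", c.ToString()));
            pos++;
        }
    }
    return tokens;
}

foreach (var (kind, text) in Scan("LOCAL VARIABLE StringY EQUALS 2010"))
    Console.WriteLine($"{kind}: {text}");
```

A parser would then consume this token list, leaving the scanner responsible only for characters and the parser for grammar.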

Adaptive Learning Software Systems…

As mentioned in the preceding post, I have worked on various learning systems in the past. By learning system I mean a technology that learns and adapts to its environment.

For example, if a system can detect a recurring pattern or condition, it can learn how to deal with that situation based on previous actions, in a probabilistic or deterministic manner.
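A minimal sketch of the simplest case: the system counts which action was taken each time a pattern was seen, and later suggests the most frequent one together with its observed frequency as a crude probability. The pattern and action names are hypothetical, and real adaptive systems use far richer models than a frequency table.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// History: pattern -> (action -> number of times that action was taken for the pattern).
var history = new Dictionary<string, Dictionary<string, int>>();

// Record what was done when a pattern was seen.
void Observe(string pattern, string action)
{
    if (!history.TryGetValue(pattern, out var actions))
        history[pattern] = actions = new Dictionary<string, int>();
    actions[action] = actions.TryGetValue(action, out var n) ? n + 1 : 1;
}

// Suggest the most frequent previous action and its observed frequency,
// or null if the pattern has never been seen.
(string Choice, double Probability)? Suggest(string pattern)
{
    if (!history.TryGetValue(pattern, out var actions)) return null;
    int total = actions.Values.Sum();
    var best = actions.OrderByDescending(kv => kv.Value).First();
    return (best.Key, (double)best.Value / total);
}

Observe("duplicate customer", "merge");
Observe("duplicate customer", "merge");
Observe("duplicate customer", "keep both");
var guess = Suggest("duplicate customer");
Console.WriteLine($"{guess?.Choice} ({guess?.Probability:P0})");
```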

Most learning systems are able to make, for want of a better word, educated guesses at processing tasks and data generation/selection based on historical actions, normally using the following three core processing models:

Each of these models stores, tracks and is evaluated based upon how the system or potential logic is used and applied.

The models get quite complex, but are used extensively in many different applications, for example all three models are used extensively in speech recognition applications and VoIP IVR applications.

These techniques can also be applied to almost all application types and are a natural progression beyond expert systems in many cases.

I will do some blog entries later with examples of various models I have used in the past, plus some common public domain models that are available in the Open source community.

It is a very interesting area, although it gets very scientific very fast at times.

Adaptive Learning Patterns in MDM.

The next post I am working on is in this area. Not sure how much detail to go into just yet!

Wednesday, December 02, 2009

Agile Product Management 101.

Alas, it is always painful dealing with product management that always changes requirements in near real-time.

It is worse when product managers only give very vague requirements and either don't elaborate on the requirements in detail or worse create requirements on the fly as a system is being built and expect these requirements to be implemented as they emerge in a strange real-time like fashion.

The above is quite normal for teams delivering ‘Green Field’ products/projects that start off as prototype technologies and are initially vague at best until certain technology concepts have been proven.

Now, the best way to deal with the above scenario in an Agile manner is, at a high level, to take the following very basic steps.

- Create a high level feature list
  - Normally from any source that the Product Owner/Manager has access to.
- Decompose high business value items into a little more detail. It is good to involve an implementation team in this.
- Get the implementation team to say what it feels it can implement and demonstrate effectively within a time box. I prefer every 2 or 3 calendar weeks.
- Do not interrupt the implementation team as they deliver what they committed to.
  - If a new requirement is discovered, save it until the time box is complete and the team has delivered on its promise (or not).
- Evaluate the implementation team's deliverable via a retrospective meeting
  - Highlight anything that is wrong
  - Highlight the good
  - Be constructive
- Plan what will be delivered in the next time box

Rinse and repeat.

Now the key here is how you manage change and deadlines.

A few key things that affect deadlines follow:

- HR related
  - Illness
  - Personal issues
  - New staff hires
  - Politics
  - Staff skill limitations
- Technology related – issues in working with technology to implement a feature in a system, for example.
  - Bugs
  - Technology limitations
- Business related
  - Budget changes
  - Custom demos for sales/pre-sales/etc.
  - Added requirements, missed requirements
    - Never add these to a running time-box
- The list goes on

There will always be commercial deadlines but this is where compromise comes in.

Compromises can be any of the following:

- Add a requirement by dropping another

- Stop current time-box and re-plan a new one

Never add features to an existing implementation teams committed work for a time-box.

It is always better to stop an implementation team’s work and re-plan a new time-box of work the team can commit to, rather than trying to force a team to deliver.

Always demand to see a demo of what was promised by an implementation team at the end of a time-boxed implementation.

Never act as a project manager when you are a product manager.

Never act as a Scrum Master when you are a product manager.

Always be available to the implementation team if they have questions on the product requirements they are working on.

Always have a retrospective meeting for the time-boxed implementation.

Always look for good and bad in a time boxed implementation.

Always respect the implementation team; never demand, but ask and discuss/negotiate.

You know the product and the business; the implementation team knows the technology and what can be done to implement the product. Both sides have to listen to each other as equals.

That's it for now.

There are plenty of examples of the above on Google if you search for “Scrum Product Management” and “Scrum Product Owner”.

I will do a more defined and clear guide to agile product management later.

Tuesday, December 01, 2009

Very Large Transaction systems…

Well for the past year I have been working on a data management product that can deal with a ridiculous amount of data and data transactions.

The product is a finance industry related product and is designed to work with financial market data and financial reference data feeds, some of which can potentially have multi-million entries per file in extreme cases.

And of course, the more feeds you process and match-merge, the more data transactions (insert, update, removal, indexing, relational mapping and querying) you generate, which can mean processing exponentially more data.

Now, the issue is simply that there is only so much processing that can be done at certain parts of a transactional process before you hit some pretty major latency and processor performance issues; shortly after these are resolved, you tend to hit physical infrastructure issues.

A case in point: using SQL Server bulk copy on an optimized table you can import data at incredible speed; however, if you need to process that data, mark it up in some way and merge it with other data, performance will nose dive. Add in basic auditing/logging support and it will drop again.

Most people resort to two main architectures in this light:

- BRBR – Bloody Row by Row Processing
  - This is the most flexible way of processing large data and provides many ways to improve speed, but is still a long way behind batch processing in all but a couple of scenarios.
  - BRBR can be optimized in the following ways:
    - Multi-threading
    - Parallel processing
    - Multi-database server
    - Cloud and grid computing and processing
      - The above is pretty new, and not that mature yet
    - Custom data storage and retrieval based upon the data and how it is obtained
      - There are a few of these out there, including a few very powerful and fast data management and ETL-like tools
  - BRBR can suffer greatly based upon:
    - Skill of engineers
    - Database technology
    - Database latency
    - Network latency
    - Development tool used
- Batch Processing
  - If you are able to just use a single database server, you can look at the various ways of doing batch processing
  - Batch processing can be optimized by:
    - Having a great, god-like DBA
    - Database tool selection
    - Good cloud/grid computing support
  - Batch processing can suffer greatly from:
    - Anytime you need to audit data processing that is done in batch. Most of the time you may have to run another batch process, or a BRBR process, to create audit data that can be generated more easily at the point of change in a BRBR based system
    - Database dependency
    - System upgrades
    - Visibility into data processing/compliance
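The auditing problem with batch processing can be shown with a toy in-memory example. The dictionary stands in for a table and the list for an audit log (both hypothetical); the row-by-row version records each change as it happens, while the batch version has to snapshot the data first and run a second diff pass afterwards to reconstruct the same audit trail.

```csharp
using System;
using System.Collections.Generic;

// In-memory stand-ins (hypothetical) for a table (key -> value) and its audit log.
var table = new Dictionary<int, string> { [1] = "A", [2] = "B", [3] = "C" };
var audit = new List<string>();

// BRBR: each row is changed individually, so the audit entry is written at the point of change.
void UpdateRowByRow(IEnumerable<(int Key, string Value)> changes)
{
    foreach (var (key, value) in changes)
    {
        bool existed = table.TryGetValue(key, out var old);
        table[key] = value;
        audit.Add($"row {key}: {(existed ? old : "<new>")} -> {value}");
    }
}

// Batch: apply the whole set in one pass (standing in for one set-based UPDATE/MERGE),
// then run a second pass that diffs a before-snapshot to reconstruct the audit trail.
void UpdateAsBatch(IEnumerable<(int Key, string Value)> changes)
{
    var before = new Dictionary<int, string>(table); // extra copy needed only for auditing
    foreach (var (key, value) in changes)
        table[key] = value;

    foreach (var (key, value) in table)
    {
        bool existed = before.TryGetValue(key, out var old);
        if (!existed || old != value)
            audit.Add($"row {key}: {(existed ? old : "<new>")} -> {value}");
    }
}

UpdateRowByRow(new[] { (2, "B2") });
UpdateAsBatch(new[] { (3, "C3"), (4, "D") });
audit.ForEach(Console.WriteLine);
```

The extra snapshot and second pass are exactly the "another batch process" cost described above, and on a real database both grow with the size of the table rather than the size of the change.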

That's it for now; kind of a messy post.

I will clean this up later, and add a post or two on advances in cloud computing in relation to Very large database processing issues.